From ancient Egypt to modern Russia, rulers have tried to build new capitals from the ground up.

March 5, 2020

In 1420, the Yongle Emperor moved China’s capital from Nanjing to the city once known as Beiping, which he had renamed Beijing, “northern capital,” early in his reign. To house his government he built the Forbidden City, the world’s largest surviving complex of wooden buildings, with more than 70 palace compounds spread across 178 acres. Incredibly, an army of 100,000 artisans and one million laborers finished the project in only three years.

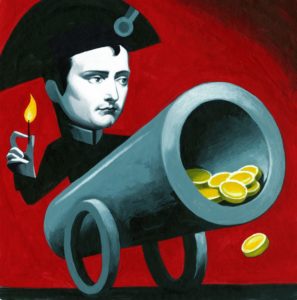

The monument of Peter the Great in St. Petersburg, Russia.

PHOTO: GETTY IMAGES

In the 600 years since, countless visitors have marveled at Yongle’s creation. As its name suggests, the Forbidden City could be entered only with the emperor’s permission. But the capital city built around it was open to view, an impressive symbol of imperial power and social order and a kind of 3-D model of the harmony of the universe.

Beijing’s success and longevity marked an important leap forward in the history of purpose-built cities. The earliest attempts at building a capital from scratch were usually hubristic affairs that vanished along with their founders. Nothing remains of the fabled Akkad, commissioned by Sargon the Great in the 24th century B.C. following his victory over the Sumerians.

But the most vainglorious and ultimately futile capital ever constructed must surely be Akhetaten, known today as Amarna, on the east bank of the Nile River in Egypt. It was built by the Pharaoh Akhenaten around 1346 B.C. to serve as a living temple to Aten, the god of the sun. The pharaoh hoped to make Aten the center of a new monotheistic cult, replacing the ancient pantheon of Egyptian deities. In his eyes, Amarna was a glorious act of personal worship. But to the Egyptians, this hastily erected mud-brick city was an indoctrination camp run by a crazed fanatic. Neither Akhenaten’s religion nor his city long survived his death.

In 330 B.C., Alexander the Great was responsible for one of the worst acts of cultural vandalism in history when he allowed his army to burn down Persepolis, the magnificent Achaemenid capital founded by Darius I, in revenge for the Persian destruction of Athens 150 years earlier.

Ironically, the year before he destroyed a metropolis in Persia, the Macedonian king had created one in Egypt. Legend has it that Alexander chose the site of Alexandria after being visited by the poet Homer in a dream. He may also have been influenced by the advantages of the location, near the island of Pharos on the Mediterranean coast, which boasted two harbors as well as a limitless supply of fresh water. Working closely with the famed Greek architect Dinocrates, Alexander personally laid out the walls, city quarters and street grid. Alexandria went on to become a center of Greek and Roman civilization, famous for its library, museum and lighthouse.

No European ruler would rival the urban ambitions of Alexander the Great, let alone Emperor Yongle, until Tsar Peter the Great. In 1703, he founded St. Petersburg on the marshy archipelago where the Neva River meets the Baltic Sea. His goal was to replace Moscow, the old Russian capital, with a new city built according to modern, Western ideas. Those ideas were unpopular with Russia’s nobility, and after Peter’s death his grandson, Peter II, moved the court back to Moscow. But in 1732 the Romanovs transferred their court permanently to St. Petersburg, where it remained until 1917. Sometimes, the biggest urban-planning dreams actually come true.