Charting the southern continent took generations of heroic sacrifice and international cooperation.

January 14, 2021

There is a place on Earth that remains untouched by war, slavery or riots. Its inhabitants coexist in peace, and all nationalities are welcomed. No, it’s not Neverland or Shangri-La—it’s Antarctica, home to the South Pole, roughly 20 million penguins and a transient population of about 4,000 scientists and support staff.

Antarctica’s existence was only confirmed 200 years ago. Following some initial sightings by British and Russian explorers in January 1820, Captain John Davis, a British-born American sealer and explorer, landed on the Antarctic Peninsula on Feb. 7, 1821. Davis was struck by its immense size, writing in his logbook, “I think this Southern Land to be a Continent.” It is, in fact, the fifth-largest of Earth’s seven continents.

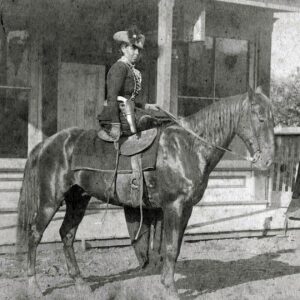

Herbert Ponting is attacked by a penguin during the 1911 Scott expedition in Antarctica.

PHOTO: HERBERT PONTING/SCOTT POLAR RESEARCH INSTITUTE, UNIVERSITY OF CAMBRIDGE/GETTY IMAGES

People had long speculated that there had to be something down at the bottom of the globe—in cartographers’ terms, a Terra Australis Incognita (“unknown southern land”). The ancient Greeks referred to the putative landmass as “Ant-Arktos,” because it was on the opposite side of the globe from the constellation of Arktos, the Bear, which appears in the north. But the closest anyone came to penetrating the freezing wastes of the Antarctic Circle was Captain James Cook, the British explorer, who looked for a southern continent from 1772-75. He got within 80 miles of the coast, but the harshness of the region convinced Cook that “no man will ever venture further than I have done.”

Davis proved him wrong half a century later, but explorers were unable to make further progress until the heroic age of Antarctic exploration in the early 20th century. In 1911, the British explorer Robert F. Scott led a research expedition to the South Pole, only to be beaten by the Norwegian Roald Amundsen, who misled his backers about his true intentions and jettisoned scientific research for the sake of getting there quickly.

Extraordinarily bad luck led to the deaths of Scott and his teammates on their return journey. In 1915, Ernest Shackleton led a British expedition that aimed to make the first crossing of Antarctica by land, but his ship Endurance was trapped in the polar ice. The crew’s 18-month odyssey to return to civilization became the stuff of legend.

Soon exploration gave way to international competition over Antarctica’s natural resources. Great Britain marked almost two-thirds of the continent’s landmass as part of the British Empire, but a half dozen other countries also staked claims. In 1947 the U.S. joined the fray with Operation Highjump, a U.S. Navy-led mission involving 13 ships and 23 aircraft to establish a research base.

Antarctica’s freedom and neutrality were in question during the Cold War. But in 1957, a group of geophysicists managed to launch a year-long Antarctic research project involving 12 countries. It was such a success that two years later the countries, including the U.S., the U.K. and the USSR, signed the Antarctic Treaty, guaranteeing the continent’s protection from militarization and exploitation. This goodwill toward men took a further 20 years to extend to women, but in 1979 American physician Michele Eileen Raney became the first woman to work at the South Pole for an entire winter.