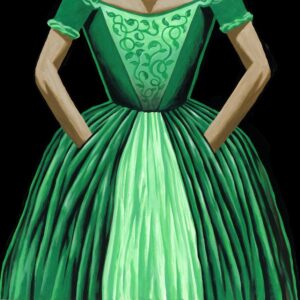

Unlike menswear, women’s fashion has for centuries been indifferent to giving women places to stash things in their garments

September 29, 2022

The current round of Fashion Weeks started in New York on Sep. 9 and will end in Paris on Oct. 4, with London and Milan slotted in between. Amid the usual impractical and unwearable outfits on stage, some designers went their own way and featured—gasp—women’s wear with large pockets.

The anti-pocket prejudice in women’s clothing runs deep. In 1954, the French designer Christian Dior stated: “Men have pockets to keep things in, women for decoration.” Designers seem to think that their idea of how a woman should look outweighs what she needs from her clothes. That mentality probably explains why a 2018 survey found that 100% of the pockets in men’s jeans were large enough to fit a midsize cellphone, but only 40% of women’s jeans pockets measured up.

The pocket is an ancient idea, initially designed as a pouch that was tied or sewn to a belt beneath a layer of clothing. Otzi, the 5,300-year-old ice mummy I wrote about recently for having the world’s oldest known tattoos, also wore an early version of a pocket; it contained his fire-starting tools.

ILLUSTRATION: THOMAS FUCHS

The ancient concept was so practical that the same basic design was still in use during the medieval era. Attempts to find other storage solutions usually came up short. In the 16th century a man’s codpiece sometimes served as an alternative holdall, despite the awkwardness of having to fish around your crotch to find things. Its fall from favor at the end of the 1600s coincided with the first in-seam pockets for men.

It was at this stage that the pocket divided into “his” and “hers” styles. Women retained the tie-on version; the fashion for wide dresses allowed plenty of room to hang a pouch underneath the layers of petticoats. But the arrangement was also impractical, since reaching a pocket required lifting the layers up.

Moralists looked askance at women’s pockets, which seemed to defy male oversight and could potentially be a hiding place for love letters, money and makeup. On the other hand, in the 17th century a maidservant was able to thwart the unwelcome advances of the diarist Samuel Pepys by grabbing a pin from her pocket and threatening to stab him with it, according to his own account.

Matters looked up for women in the 18th century with the inclusion of side slits on dresses that allowed them direct access to their pockets. Newspapers began to carry advertisements for articles made especially to be carried in them. Sewing kits and snuff boxes were popular items, as were miniature “conversation cards” containing witty remarks “to create mirth in mixed companies.”

Increasingly, though, the essential difference between men’s and women’s pockets—his being accessible and almost anywhere, hers being hidden and nestled near her groin—gave them symbolism. Among the macabre acts committed by the Victorian serial killer Jack the Ripper was the disemboweling of his victims’ pockets, which he left splayed open next to their bodies.

Women had been agitating for more practical dress styles since the formation of the Rational Dress Society in Britain in 1881, but it took the upheavals caused by World War I for real change to happen. Women’s pantsuits started to appear in the 1920s. First lady Eleanor Roosevelt caused a sensation by appearing in one in 1933. The real revolution began in 1934, with the introduction of Levi’s bluejeans for women, 61 years after the originals for men. The women’s front pocket was born. And one day, with luck, it will grow up to be the same size as men’s.

