I used not to believe in the “midlife crisis”. I am ashamed to say that I thought it was a convenient excuse for self-indulgent behaviour — such as splurging on a Lamborghini or getting buttock implants. So I wasn’t even aware that I was having one until earlier this year, when my family complained that I had become miserable to be around. I didn’t shout or take to my bed, but five minutes in my company was a real downer. The closer I got to my 50th birthday, the more I radiated dissatisfaction.

Can you be simultaneously contented and discontented? The answer is yes. Surveys of “national wellbeing” in several countries, including those conducted in the UK by the Office for National Statistics, have revealed a fascinating U-curve in relation to happiness and age. In Britain, feelings of stress and anxiety appear to peak at 49 and subsequently fade as the years increase. Interestingly, a 2012 study showed that chimpanzees and orang-utans exhibited a similar U-curve of happiness as they reached middle age.

On a rational level, I wasn’t the least bit disappointed with my life. The troika of family, work and friends made me very happy. And yet something was eating away at my peace of mind. I regarded myself as a failure — not in terms of work but as a human being. Learning that I wasn’t alone in my daily acid bath of gloom didn’t change anything.

One of F Scott Fitzgerald’s most memorable lines is: “There are no second acts in American lives.” It is quoted so often that it has achieved the status of a truism, and it is usually taken to be an ironic commentary on how Americans, particularly men, are so frightened of failure that they cling to the fiction that life is a perpetual first act. As I thought about the line in relation to my own life, Fitzgerald’s meaning seemed clear. First acts are about actions and opportunities. There is hope, possibility and redemption. Second acts are about reactions and consequences.

Old habits die hard, however. I couldn’t help conducting a little research into Fitzgerald’s life. What was the author of The Great Gatsby really thinking when he wrote the line? Would it even matter?

The answer turned out to be complicated. As far as the quotation goes, Fitzgerald actually wrote the reverse. The line appears in a 1932 essay entitled My Lost City, about his relationship with New York: “I once thought that there were no second acts in American lives, but there was certainly to be a second act to New York’s boom days.”

It reappeared in the notes for his Hollywood novel, The Love of the Last Tycoon, which was half finished when he died in 1940, aged 44. Whatever he had planned for his characters, the book was certainly meant to have been Fitzgerald’s literary comeback — his second act — after a decade of drunken missteps, declining book sales and failed film projects.

Fitzgerald may not have subscribed to the “It’s never too late to be what you might have been” school of thought, but he wasn’t blind to reality. Of course he believed in second acts. The world is full of middle-aged people who have successfully reinvented themselves a second or even third time. The meteoric rise of Emperor Claudius (10BC to AD54) is one of the earliest historical examples of the true “second act”.

According to Suetonius, Claudius’s physical infirmities had made him the butt of scorn among his powerful family. But his lowly status saved him after the assassination of his nephew, Caligula. The plotters found the 50-year-old Claudius cowering behind a curtain. On the spur of the moment, instead of killing him, as they did Caligula’s wife and daughter, the plotters decided the stumbling and stuttering scion of the Julio-Claudian dynasty could be turned into a puppet emperor. It was a grave miscalculation. Claudius seized on his changed circumstances. The bumbling persona was dropped and, although flawed, he became a forceful and innovative ruler.

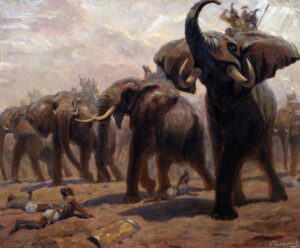

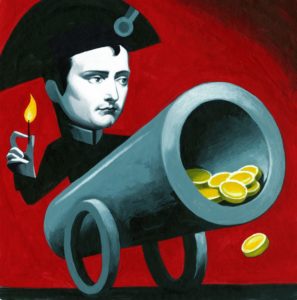

Mostly, however, it isn’t a single event that shapes life after 50 but the willingness to stay the course long after the world has turned away. It’s extraordinary how the granting of extra time can turn tragedy into triumph. In his heyday, General Mikhail Kutuzov was hailed as Russia’s greatest military leader. But by 1800 the 55-year-old was prematurely aged. Stiff-limbed, bloated and blind in one eye, Kutuzov looked more suited to play the role of the buffoon than the great general. He was Alexander I’s last choice to lead the Russian forces at the Battle of Austerlitz in 1805, but was the first to be blamed for the army’s defeat.

Kutuzov was relegated to the sidelines after Austerlitz. He remained under official disfavour until Napoleon’s army was halfway to Moscow in 1812. Only then, with the army and the aristocracy begging for his recall, did the tsar agree to his reappointment. Thus, in Russia’s hour of need it ended up being Kutuzov, the disgraced general, who saved the country.

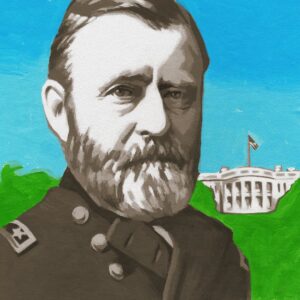

Winston Churchill had a similar apotheosis in the Second World War. For most of the 1930s he was considered a political has-been by friends and foes alike. His elevation to prime minister in 1940 at the age of 65 changed all that, of course. But had it not been for the extraordinary circumstances created by the war, Robert Rhodes James’s Churchill: A Study in Failure, 1900-1939 would have been the epitaph rather than the prelude to the greatest chapter in his life.

It isn’t just generals and politicians who can benefit from second acts. For writers and artists, particularly women, middle age can be extremely liberating. The Booker prize-winning novelist Penelope Fitzgerald published her first book at 59 after a lifetime of teaching while supporting her children and alcoholic husband. Thereafter she wrote at a furious pace, producing nine novels and three biographies before she died at 83.

I could stop right now and end with a celebratory quote from Morituri Salutamus by the American poet Henry Wadsworth Longfellow: “For age is opportunity no less / Than youth itself, though in another dress, / And as the evening twilight fades away / The sky is filled with stars, invisible by day.”

However, that isn’t — and wasn’t — what was troubling me in the first place. I don’t think the existential anxieties of middle age are caused or cured by our careers. Sure, I could distract myself with happy thoughts about a second act where I become someone who can write a book a year rather than one a decade. But that would still leave the problem of the flesh-and-blood person I had become in reality. What to think of her? It finally dawned on me that this had been my fear all along: it doesn’t matter which act I am in; I am still me.

My funk lifted once the big day rolled around. I suspect that joining a gym and going on a regular basis had a great deal to do with it. But I had also learnt something valuable during these past few months. Worrying about who you thought you would be or what you might have been fills a void but leaves little space for anything else. It’s coming to terms with who you are right now that really matters.