The winding, millennia-long route from bark to Bayer.

February 1, 2024

For ages the most reliable medical advice was also the simplest: Take two aspirin and call me in the morning. This cheap pain reliever, which also thins the blood and reduces inflammation, has been a medicine-cabinet staple ever since it became available over the counter nearly 110 years ago.

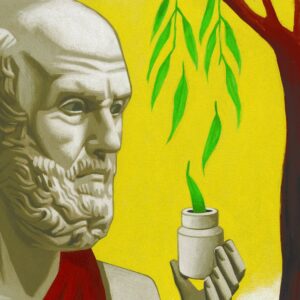

Willow bark, a distant ancestor of aspirin, was a popular ingredient in ancient remedies for relieving pain and treating skin problems. Hippocrates, the father of medicine, was a firm believer in willow’s curative powers. In the fourth century B.C., he advised women with gynecological troubles to burn the leaves “until the steam enters the womb.”

That willow bark could reduce fevers wasn’t discovered until the 18th century. Edward Stone, an English clergyman, noticed that its extremely bitter taste resembled that of cinchona bark, the source of the costly malaria drug quinine. Stone dried the bark and dosed himself to treat a fever. When he felt better, he tested the powder on others suffering from “ague,” or malaria. When their fevers disappeared, he reported triumphantly to the Royal Society in 1763 that he had found another malaria cure. In fact, he had found only a way to treat its symptoms.

Willows contain salicin, a bitter compound that the body converts into salicylic acid, which has anti-inflammatory, fever-reducing and pain-relieving properties. Experiments with salicin and its derivative salicylic acid began in earnest in Europe in the 1820s. In 1853 Charles Frédéric Gerhardt, a French chemist, became the first to synthesize acetylsalicylic acid, the active ingredient in aspirin, but he abandoned the research and died young.

There is some debate over who deserves credit for aspirin, which became a blockbuster drug for the German company Bayer. The company’s official history credits Felix Hoffmann, a Bayer chemist, with synthesizing acetylsalicylic acid in 1897 in the hope of alleviating his father’s severe rheumatic pain. Bayer registered Aspirin as a trademark in 1899, and by 1918 it had become one of the most widely used drugs in the world.

ILLUSTRATION: THOMAS FUCHS

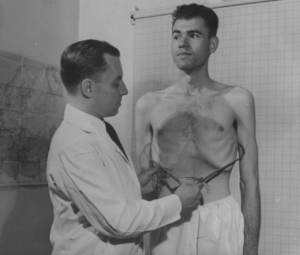

But did Hoffmann work alone? Shortly before his death in 1949, Arthur Eichengrün, a Jewish chemist at Bayer who had been imprisoned in the Theresienstadt concentration camp during World War II, published a paper claiming that the company had erased his contribution. In 2000 the BMJ published a study supporting Eichengrün’s claim. Bayer, which became part of the Nazi-backing conglomerate I.G. Farben in 1925, has denied that Eichengrün had a role in the breakthrough.

Aspirin shed its associations with the Third Reich after the Allies broke up I.G. Farben and Bayer re-emerged as an independent company in the early 1950s, but the drug’s pain-relieving hegemony was short-lived. In 1956 Bayer’s British affiliate brought acetaminophen to the market. Ibuprofen, patented in the early 1960s, followed in 1969.

The drug’s fortunes recovered after the New England Journal of Medicine published a study in 1989 that found the pill reduced the risk of a first heart attack by 44%. Some public-health officials promptly encouraged anyone over 50 to take a daily aspirin as a preventive measure.

But as in the case of Rev. Stone, the science turned out to be more complicated. In 2022 the U.S. Preventive Services Task Force advised against adults 60 and older starting a daily aspirin to prevent a first heart attack or stroke, given the risk of internal bleeding and the availability of other therapies. Aspirin may work wonders, but it can’t work miracles.