The “Inferno” brought human complexity to the medieval conception of the afterlife

September 30, 2021

What is hell? For Plato, it was Tartarus, the lowest level of Hades where those who had sinned against the gods suffered eternal punishment. For Jean-Paul Sartre, the father of existentialism, hell was other people. For many travelers today, it is airport security.

No depiction of hell, however, has been more enduring than the “Inferno,” part one of the “Divine Comedy” by Dante Alighieri, the 700th anniversary of whose death is commemorated this year. Dante’s hell is divided into nine concentric circles, each one more terrifying and brutal than the last until the frozen center, where Satan resides alongside Judas, Brutus and Cassius. With Virgil as his guide, Dante’s spiritually bereft and depressed alter ego enters via a gate bearing the motto “Abandon all hope, ye who enter here”—a phrase so ubiquitous in modern times that it greets visitors to Disney’s Pirates of the Caribbean ride.

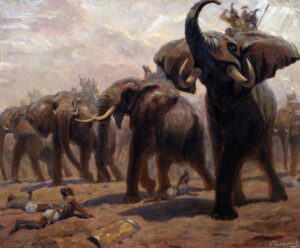

The inscription was a Dantean invention, but the idea of a physical gate separating the land of the living from a desolate one of the dead was already at least 3,000 years old: In the Sumerian Epic of Gilgamesh, written around 2150 B.C., two scorpionlike figures guard the gateway to an underworld filled with darkness and dust.

ILLUSTRATION: THOMAS FUCHS

The underworld of the ancient Egyptians was only marginally less bleak. Seven gates blocked the way to the Hall of Judgment, according to the Book of the Dead. Getting through them was arduous and fraught with failure. The successful then had to submit to having their hearts weighed against the Feather of Truth. Those found wanting were thrown into the fire of oblivion.

Zoroastrianism, the official religion of the ancient Persians, was possibly the first to divide the afterlife into two physically separate places, one for good souls and the other for bad. This vision contrasted with the Greek view of Hades as the catchall for the human soul and the early Hebrew Bible’s description of Sheol as a shadowy pit of nothingness. In the 4th century B.C., Alexander the Great’s Macedonian empire swallowed both Persia and Judea, and the three visions of the afterlife commingled. “Hell” would then appear frequently in Greek versions of the Old Testament. But the word, scholars point out, was a single translation for several distinct Hebrew terms.

Early Christianity offered more than one vision of hell, but all contained the essential elements of Satan, sinners and fire. The “Apocalypse of Peter,” a 2nd-century text, helped start the trend of listing every sadistic torture that awaited the wicked.

Dante was thus following a well-trod path with his imaginatively crafted punishments of boiling pitch for the dishonest and downpours of icy rain on the gluttonous. But he deviated from tradition by describing hell’s occupants with psychological depth and insight. Dante’s narrator rediscovers the meaning of Christian truth and love through his encounters. In this way the “Inferno” speaks to the complexities of the human condition rather than serving merely as a literary zoo of the damned.

The “Divine Comedy” changed the medieval world’s conception of hell, and with it, man’s understanding of himself. Boccaccio, Chaucer, Milton, Balzac: the list of writers directly inspired by Dante’s vision goes on. “Dante and Shakespeare divide the world between them,” wrote T.S. Eliot. “There is no third.”